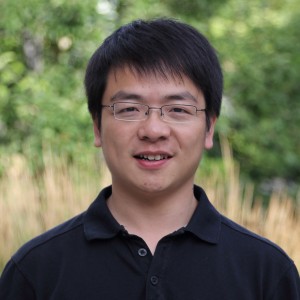

Prof. Jingyi Yu is a professor and executive dean of the School of Information Science and Technology (SIST) at ShanghaiTech University. He currently serves as Faculty Search Committee Director and SIST Tenure & Promotion Committee Director. His research interests span a range of topics in computer vision and computer graphics, especially computational photography and non-conventional optics and camera designs.